Steelers top Dolphins to extend MNF home winning streak to 23

Aaron Rodgers, Steelers snap Dolphins' four-game win streak

Here's what to know from the Steelers' win over the Dolphins on Monday night.

TOP HEADLINES

FLAGG DROPS 42 VS. JAZZ

Cooper Flagg becomes youngest player in NBA history to score 40 points in a game

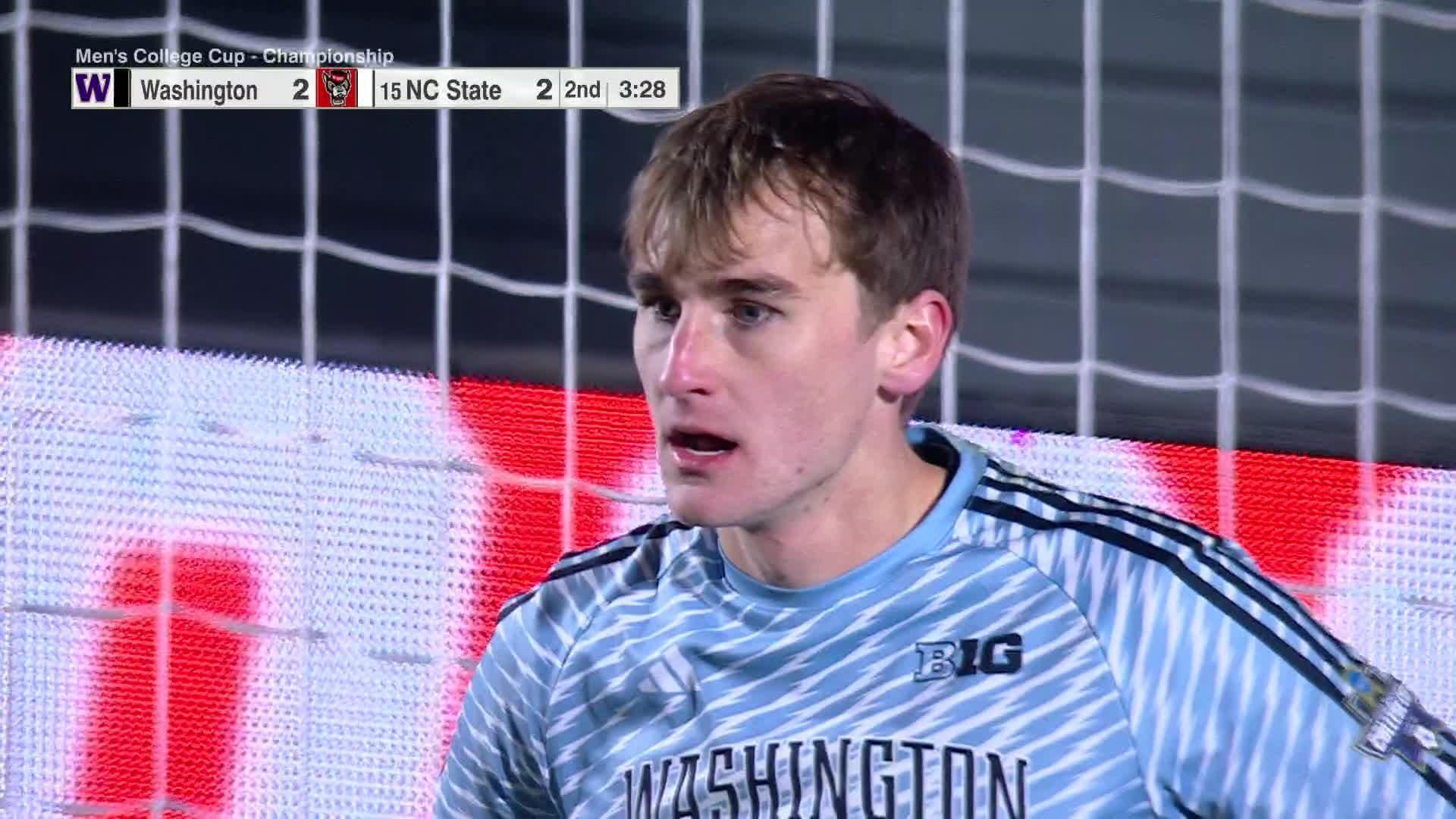

Washington wins first national title on golden goal

BEST OF PEYTON AND ELI

Why Michael Keaton wants to play Steelers' special teams coach Danny Smith

Peyton and Eli show off duck-calling skills to Lainey Wilson

ROOM FOR IMPROVEMENT

What move should your team make? Trade questions for all 30 franchises

With NBA trade season upon us, Bobby Marks examines the conversations happening across all 30 front offices.

Which NBA players can be traded? We have the full team-by-team lists

Bobby Marks runs through every roster, mapping out which players can and cannot be traded before Feb. 5.

SLAMFEST AT TD GARDEN

Isaiah Stewart viciously wrecks the rim

Javonte Green throws down ferocious breakaway dunk

BIG NAMES SIDELINED

Barnwell on two knee injuries with big implications: What now for the Packers, Chiefs?

Micah Parsons and Patrick Mahomes both suffered significant knee injuries Sunday. What do they mean for the Packers' playoff push and the Chiefs' offseason plans?

Stephen A.: It's the perfect time for Travis Kelce to retire

WHAT WE LEARNED

Big questions from Week 15 games: Can Pittsburgh's defense repeat against Detroit? What now for Chiefs?

Our NFL Nation reporters answer big questions from each Week 15 game. Here's what you need to know.

Has Lawrence leveled up? Pats-Bills rematch? Let's overreact to Week 15

Has Liam Coen unlocked the Jags' mercurial QB? Will we see an AFC East rubber match?

Top Headlines

- Chiefs' Mahomes has surgery for torn ACL, LCL

- Texas QB Manning to return for 2026 season

- Interim coach says U-M's players feel 'betrayed'

- Commanders shut down Daniels for rest of year

- Spurs mull bringing Wemby off bench in Cup final

- SS Kim returns to Braves on 1-year, $20M deal

- Washington claims first NCAA men's soccer title

- Jurors hear closing arguments in Skaggs civil trial

- 🔎 Steelers subtly troll Fins after MNF win

ICYMI

McCarthy hits Griddy on way into end zone for Vikings TD

J.J. McCarthy dances his way into the end zone after faking out the Cowboys' defense for a Vikings touchdown.

NFL Playoff Machine

Simulate playoff matchups

Predict playoff pairings by selecting the winners of the remaining regular-season games to generate potential scenarios.

Sounding Off

Stephen A. emphatically declares Cleveland not the right place for Shedeur

Stephen A. Smith explains why the sooner Shedeur Sanders leaves Cleveland, the better it will be for his NFL career.

Trending Now

Way-too-early look at the 2026 Heisman Trophy race

We size up next year's most likely candidates for college football's highest individual honor.

ESPN NBA 25 under 25: Ranking Wemby, Flagg, Cade and the next wave

Which youngsters are among the next class of superstars? Which are already there? Our NBA experts rank their top 25 under 25.

Dodgers, Blue Jays could rule winter after epic World Series

Baseball insiders were abuzz over L.A. and Toronto at this week's winter meetings, as both have the money -- and pull -- to dominate the winter.
